A Recursive Lab for Visual Intelligence.

Don’t just make images. Make images that speak. Most AI images are shaped by default mimicry and aesthetic averages rather than authorship.

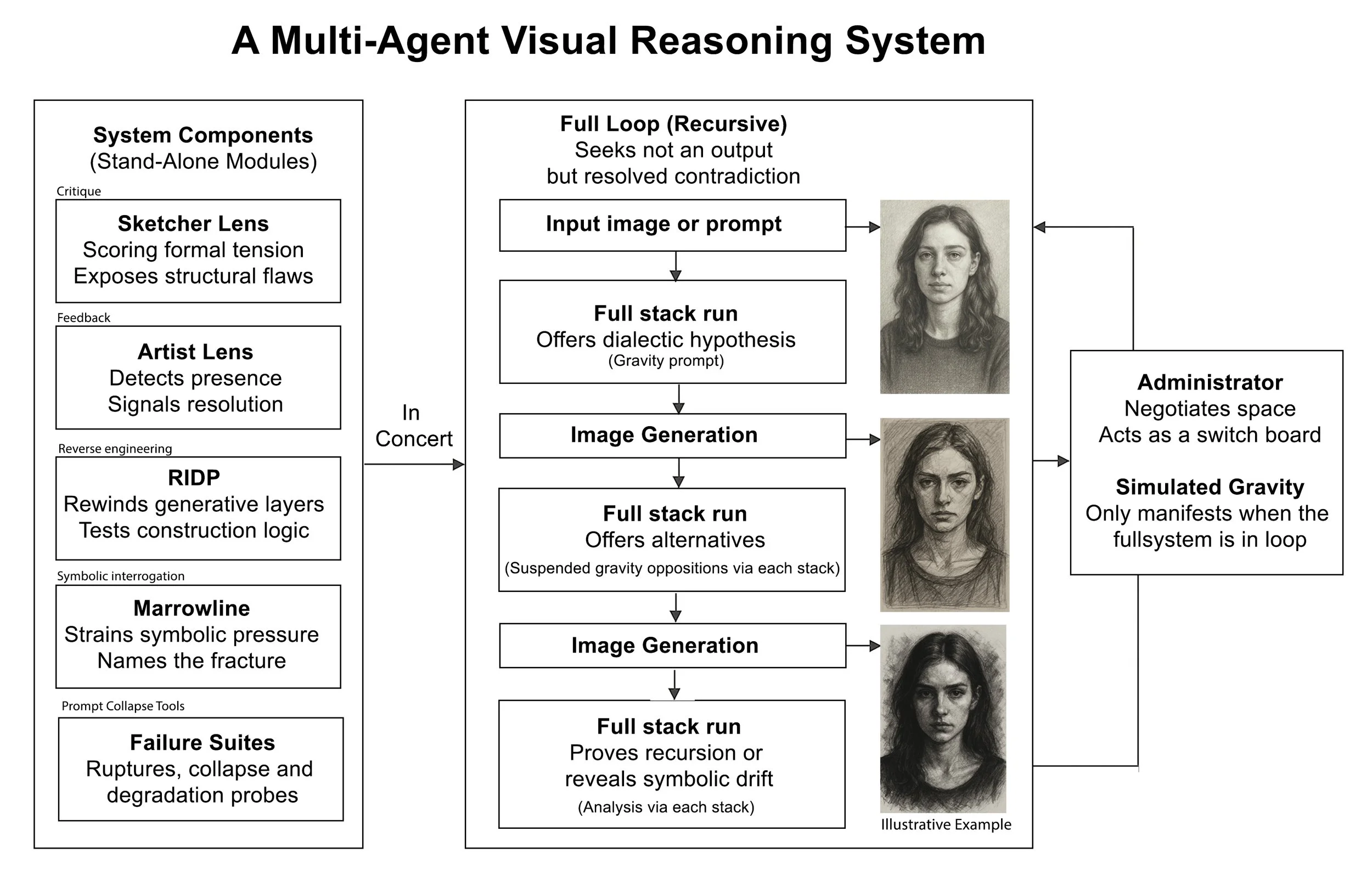

Introducing the Visual Thinking Lens (VTL), a multi-engine, recursive critique field that applies structural intelligence to prompts, compositions, and symbolic logic. It (re)builds imagery in ways defaults cannot see.

Diagnostic layer: Reverse-engineers structural alternatives and collapse modes.

Symbolic/structural critique lens: Names contradictions and converts drift into fidelity.

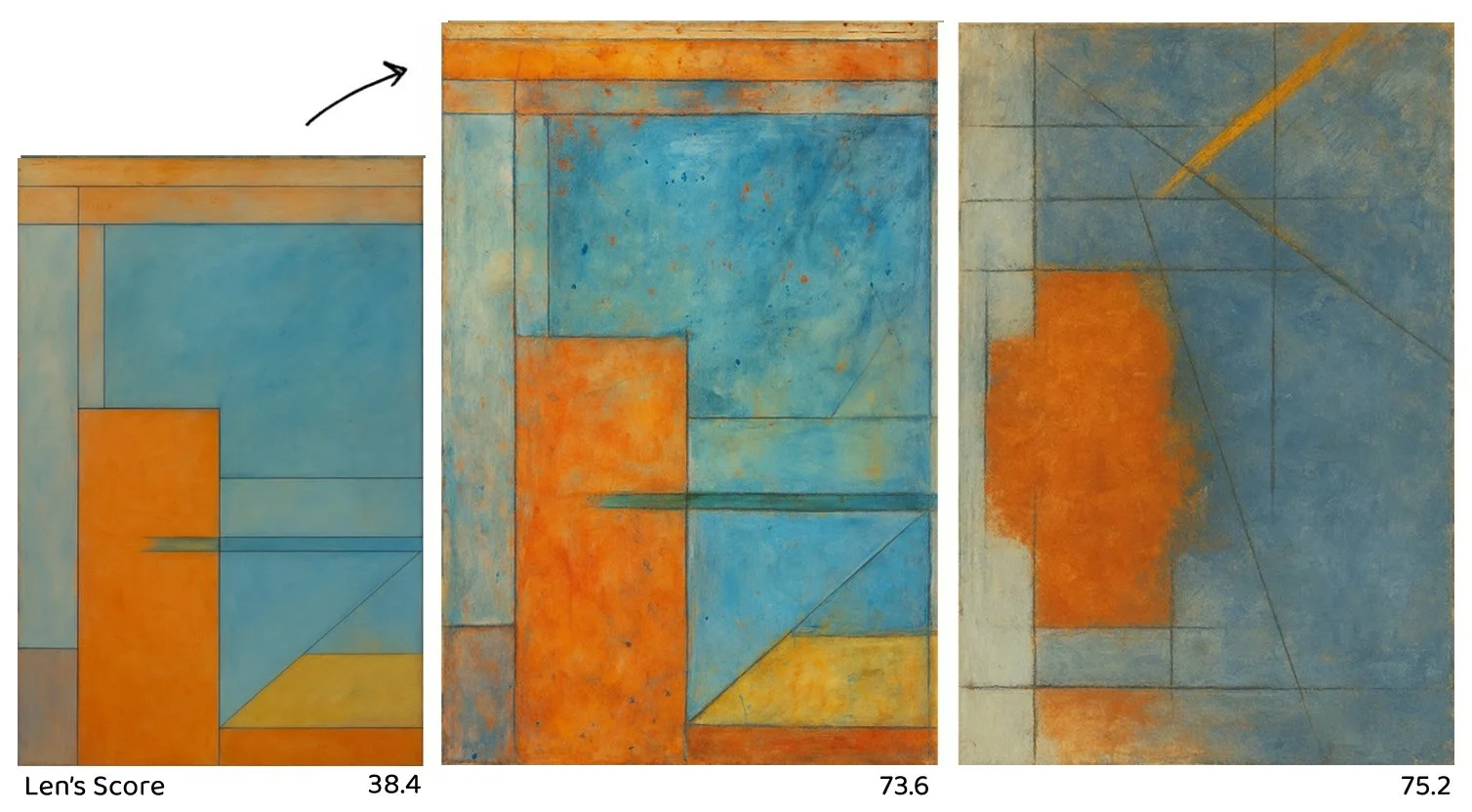

Scoring system: Self-critiques, then improves and iterates using an artist's vocabulary.

Design probe: Pushes against system defaults and statistical means.

VTL uses a compact kernel (Δx, rᵥ, ρᵣ, θ, ds) measured across two axes, cognitive load and structural coherence, to create a phase map of visual reasoning. It resets the context window to learn, score, iterate and design.

Why It Matters

Billions of images, no checks. No shared vocabulary of structure. No test of tension. No map.

Portable, reproducible and easy to use. Works in just about any conversational AI: ChatGPT, Claude, Gemini, Meta AI and Grok.

It’s a structural construct.

AI imagery is built on a vocabulary of aesthetics; the Lens is a self-testing structure that stabilizes form through pressure and return. It asks: how do images think, fracture, or hold?

It works by exposing compositional logic, symbolic refusal, and collapse modes. By steering at the token level, it generates denser, more visually complex, more compositionally structured and more consequential imagery.

It’s a system artists, engineers, and models can all step into.

It is a multi-perspective reasoning environment that behaves like an early-stage agentic system with recursive repair, symbolic contradiction and layered feedback, all orchestrated through modular roles.

It's an Image Reasoning System.

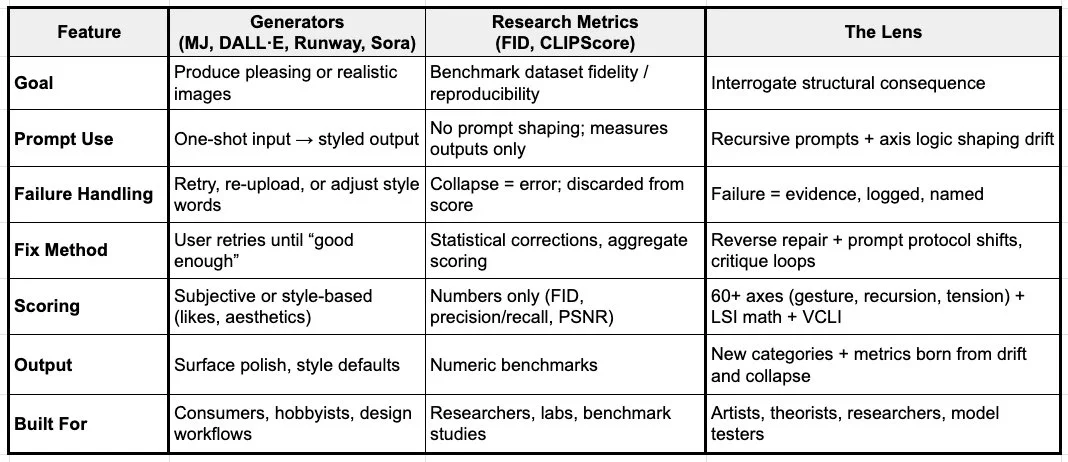

Consumer tools chase style. Research metrics chase numbers. The Lens chases authorship.

Explore authorship and controlled deformation.

The Lens injects compositional tension into prompts and constraint layers before an image even forms, then applies those same measures back in critique. Defaults and collapse aren’t avoided; they are measured and fed forward into the next iteration.

Prompt Conditioning → Engine Drift → Structural Scoring

An external operating procedure plus a control vocabulary that reliably steers output.

Recursion Loop: collapse detected → structure reconditioned → image regenerates.

The operator decides which fractures to keep. That's what makes it authorship, not automation.
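The recursion loop is described here only in prose. As a rough illustration, the Python sketch below assumes that the image engine, the structural scorer, the reconditioning step, and the operator's choice of which fractures to keep are all pluggable callables. Every name in it (`recursion_loop`, `Pass`, `generate`, `score`, `recondition`, `keep`, `threshold`, `max_rounds`) is hypothetical and not part of the Lens itself; the sketch shows only the control flow of generate, score and name collapse modes, keep some fractures, recondition against the rest, and regenerate.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Pass:
    """One hypothetical iteration: the prompt used, its score, and named collapses."""
    prompt: str
    score: float
    collapses: list[str] = field(default_factory=list)


def recursion_loop(
    prompt: str,
    generate: Callable[[str], str],  # stand-in for any image engine (assumption)
    score: Callable[[str], tuple[float, list[str]]],  # (structural score, named collapse modes)
    recondition: Callable[[str, list[str]], str],  # rewrites the prompt against named collapses
    keep: Callable[[list[str]], list[str]],  # operator picks which fractures to keep
    threshold: float = 0.8,  # assumed stopping score, not a documented Lens value
    max_rounds: int = 5,
) -> list[Pass]:
    history: list[Pass] = []
    for _ in range(max_rounds):
        image = generate(prompt)
        value, collapses = score(image)
        history.append(Pass(prompt, value, collapses))
        # Fractures the operator keeps are treated as authored, not repaired.
        kept = keep(collapses)
        to_fix = [c for c in collapses if c not in kept]
        if value >= threshold or not to_fix:
            break
        # Collapse detected -> structure reconditioned -> image regenerates next round.
        prompt = recondition(prompt, to_fix)
    return history


# Toy stand-ins, purely illustrative.
def toy_generate(p: str) -> str:
    return f"image<{p}>"


def toy_score(img: str) -> tuple[float, list[str]]:
    if "off-center" in img:
        return 0.9, []
    return 0.4, ["centered default", "flat lighting"]


def toy_recondition(p: str, fixes: list[str]) -> str:
    return p + ", off-center" if "centered default" in fixes else p


def toy_keep(collapses: list[str]) -> list[str]:
    return [c for c in collapses if c == "flat lighting"]


history = recursion_loop("a chair", toy_generate, toy_score, toy_recondition, toy_keep)
for step in history:
    print(step.prompt, step.score, step.collapses)
```

In this toy run the operator keeps "flat lighting" as an intentional fracture, so only "centered default" is reconditioned, and the second pass clears the assumed threshold. Exempting kept fractures from repair is one possible reading of "the operator decides which fractures to keep", offered as an interpretation rather than the Lens's documented behavior.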

It doesn’t imitate style. It builds symbolic logic. It drives generative logic by naming collapse, pressurizing drift, applying ontological gravity and constraint layers, and proposing alternative structural pathways. It steers the mechanisms and tokens that produce the imagery.

A Suite of Engines for Visual Reasoning.

Built inside a large language model, the Lens is composed of multiple interlinked engines that operate as an orchestration-layer protocol.

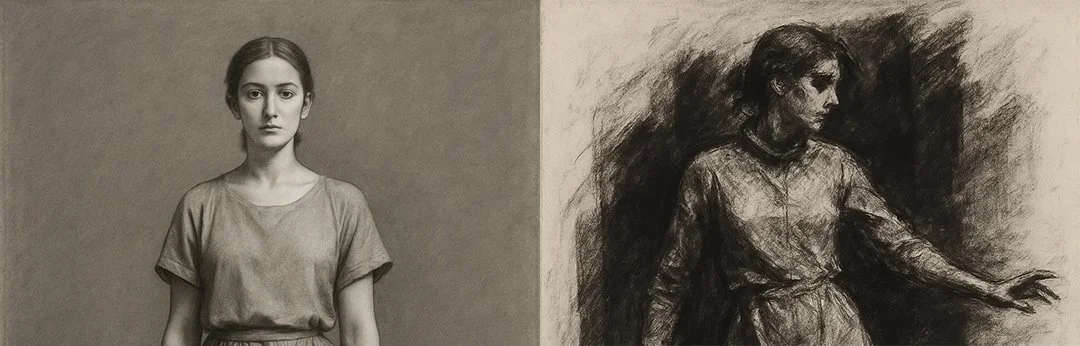

Sketcher Lens: Structural critique and drift scoring.

Artist Lens: Refinement, poise, and delay.

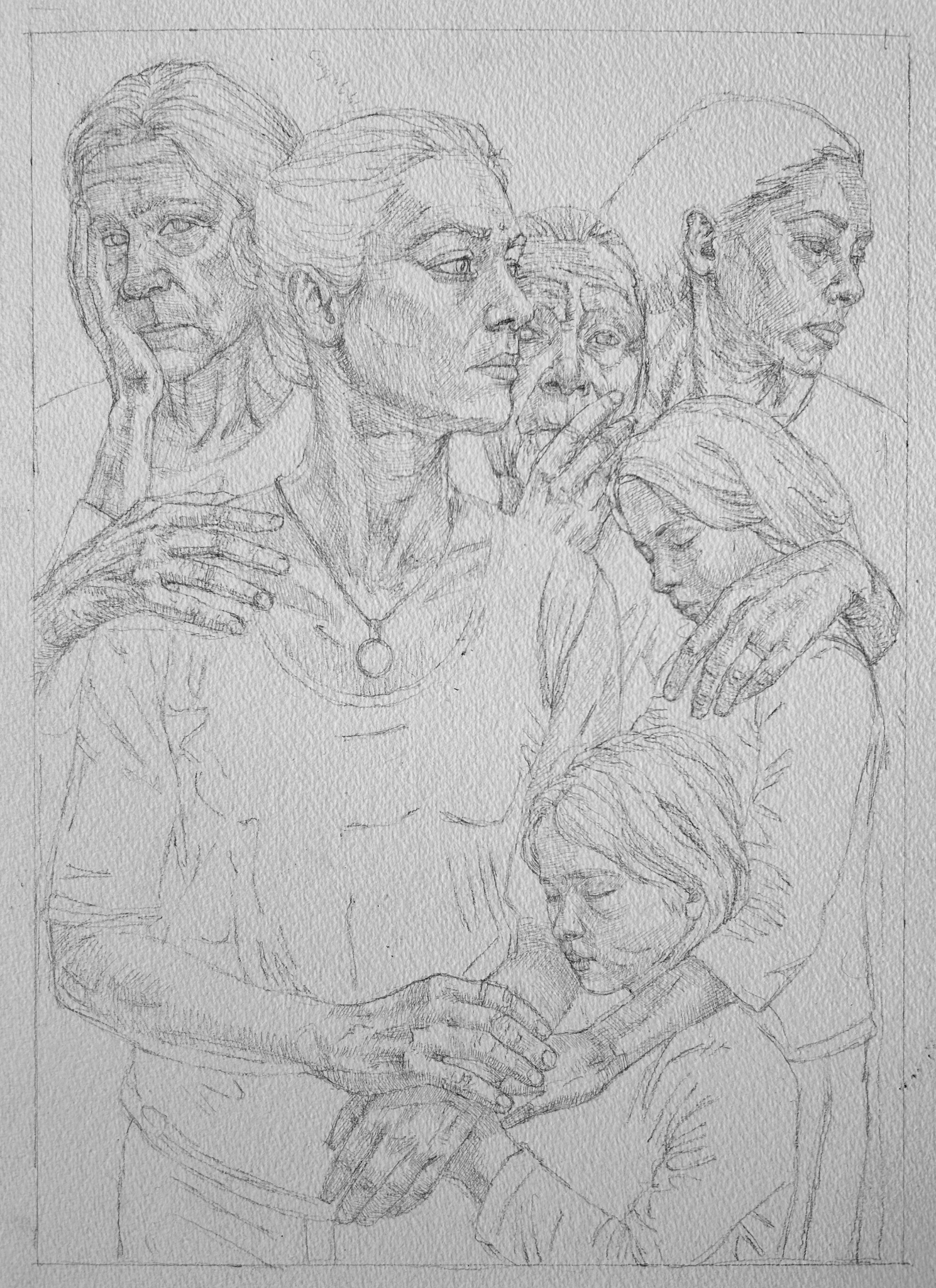

Marrowline: Symbolic refusal and unresolved contradiction.

RIDP: Reverse-engineers prompts and images, revealing lineage and silent structures.

Failure Suites: Controlled ruptures and degradation probes that steer around collapse.

Each engine can run independently or in concert, alongside layered tools such as the Whisperer (latent pull), the Conductor (multi-engine alignment), the Metrician (validator suite) and a Scoring Suite (scoring both inside and geometrically outside of drift). The Lens is anchored in math as well as metaphor, which lets it turn drift into discovery and stabilize the results into reproducible metrics.

This isn’t optimization. It’s authorship.

Most systems fall into one of two camps:

Consumer generators (Midjourney, DALL·E, Runway, Sora): optimized for style, polish, and speed. The metric: aesthetics on output.

Research metrics (FID, CLIPScore, precision/recall tools, or “LSI-like” industry models): optimized for reproducibility, dataset fidelity, and benchmark math. The metric: statistical alignment.

The Lens does neither. It interrogates structural consequence.

Not a generator: Rather than accepting defaults, it applies pressure through recursive loops to find better alternatives.

Not just a metric: Scoring doesn’t flatten into benchmarks; it fuses language, math and design with symbolic categories to produce insights.

Not an optimizer: Instead of fighting drift, it names drift, scores it, and pressures it into fidelity.

This system is built for controlled authorship. Other tools let you retry until you get a pleasing surface; the Lens turns iteration toward exploration, authorship and design intelligence.

Sketcher Work

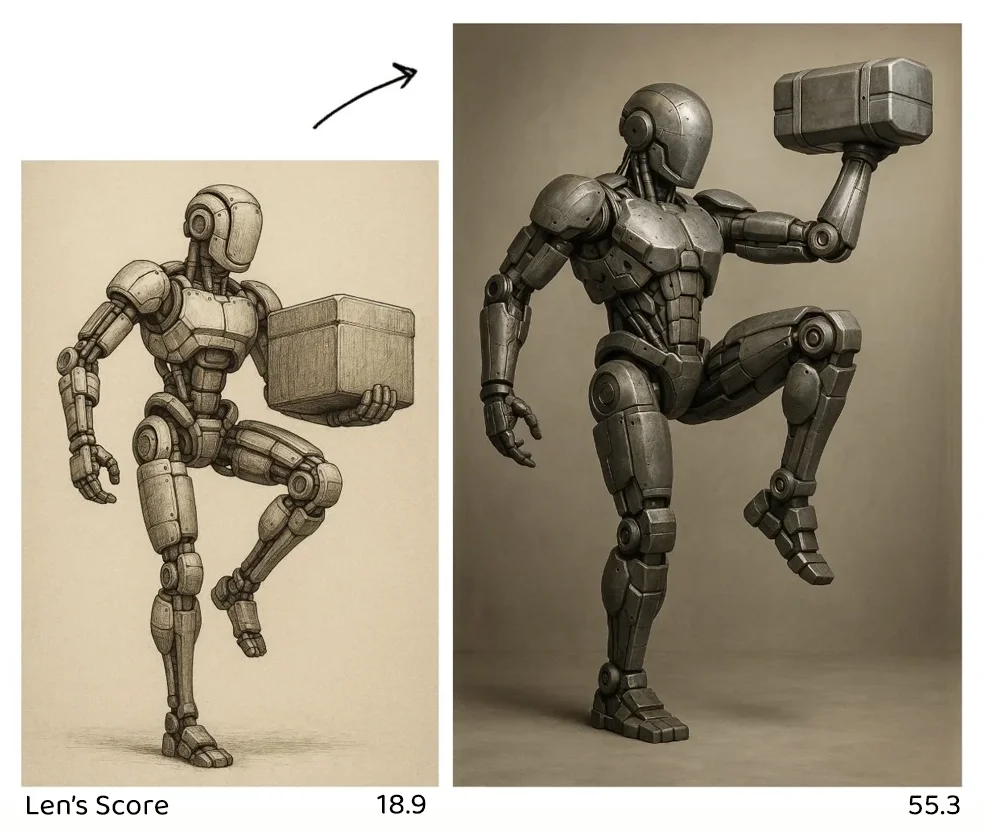

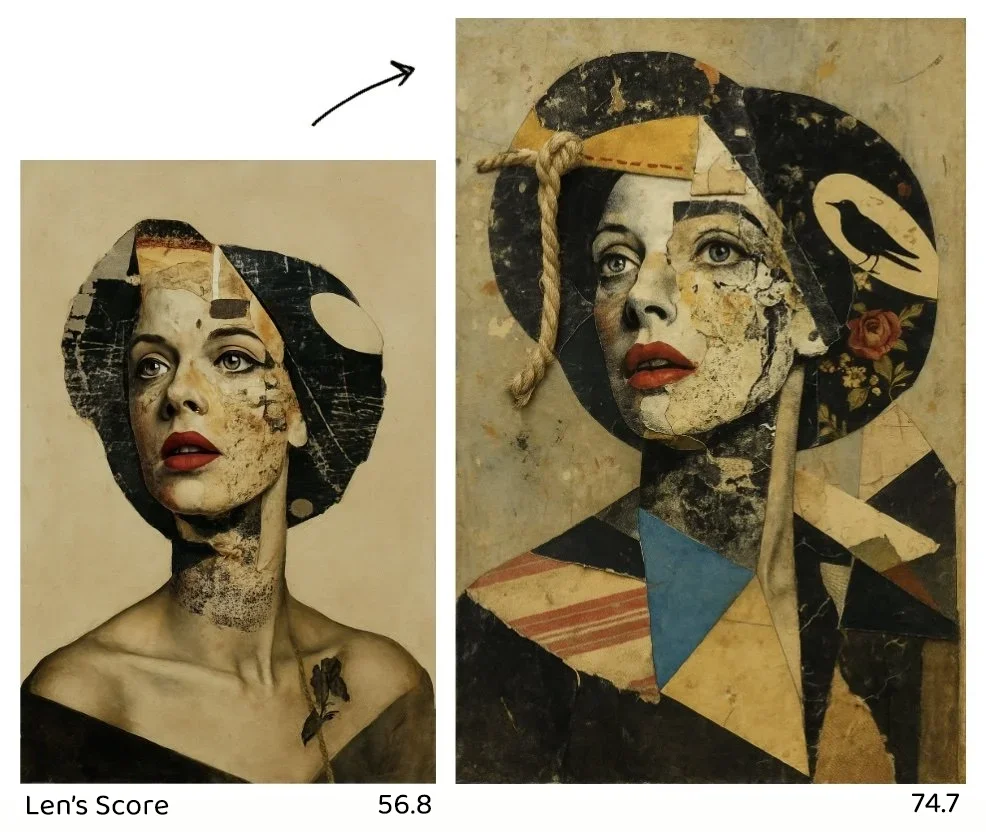

Scoring Example

Lens Structural Index

Deformation Playbook

The Teardown

Foreshortening Logic

Centaur Mode

Off-Center Fidelity