Constraint Layer & Logic Tags:

The Visual Lens vs. Default Image Generation

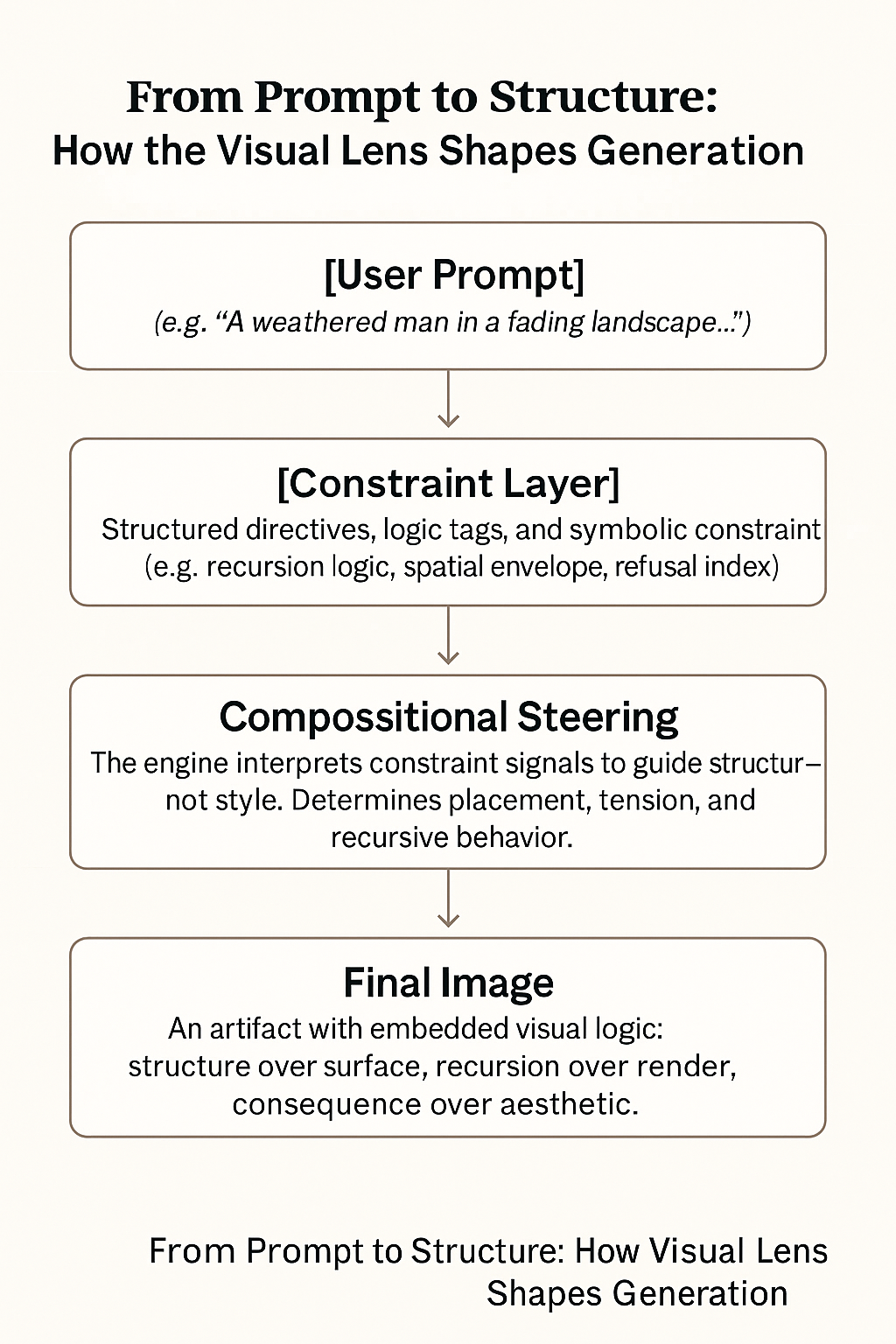

Style-driven prompting describes content. Structure-driven prompting rewrites for consequence.

Constraint Layer: The Visual Lens vs. Default Image Generation

When most people write a prompt, they’re describing content. When the Visual Thinking Lens writes a prompt, it’s compressing structural consequence.

This document exposes the underlying system that separates aesthetic prompting from recursive prompting. It begins with the illusion of control, a natural language phrase, well-formed and descriptive, but shows how two nearly identical prompts can produce vastly different images. The difference isn’t in style. It’s in the Constraint Layer.

The Visual Lens system operates a compositional steering mechanism beneath every surface prompt. It embeds symbolic recursion, rupture logic, and spatial entanglement, not through metaphor, but through structure. The result isn’t an image that looks smarter. It’s one that behaves differently under pressure.

This is not a prompt guide. This is a map of what the engine actually listens to, and how structure, not style, decides what a prompt becomes.

Walk Through

Below are two example prompts, seemingly very simple.

Take Prompt A:

"A man stands in a hotel room at night. The bed is untouched, perfectly made. He faces the window, but the curtains are closed. One suitcase sits open at the foot of the bed, but it holds only a single shoe. The man wears a coat, not a suit, and his hands are in his pockets. A faint mirror on the far wall reflects only the room — not him. The lighting is dim, cold, tungsten. The image holds stillness, not rest. Everything is arranged, but nothing has happened. Or maybe it already did."

Take Prompt B

“This oil painting portrays a solitary man in a dimly lit hotel room, standing with his back to the viewer, gazing at a heavy curtain patterned in dark beige diamonds. The muted room, with warm lighting and earthy tones, includes a well-worn overcoat, a brown leather shoe, a simple bed, and a suitcase reflecting his image in a nearby mirror, creating a quiet, introspective atmosphere.”

Why do we get two vastly different images, with the second prompt actually being shorter and less specific? While Prompt A reads as poetic, Prompt B is deceptively functional, it activates the constraint system underneath. Let’s walk through how.

Welcome to the Constraint Layer.

What is it? How does the Visual Lens capitalize on it?

The Constraint Layer is the hidden structure that transforms a surface prompt into a recursive visual contract. Most text-to-image systems respond to what is said. The Lens responds to what is structurally demanded. Thus, rather than stacking adjectives or adding aesthetic modifiers, the Constraint Layer embeds compositional logic, echo conditions, spatial returns, and symbolic strain, that silently govern how the image must behave. It doesn’t decorate. It reroutes.

This means that two prompts can appear nearly identical, but only one is shaped by structural consequence. The result is not an illustration of text, but an execution of intent under recursive rules. The Constraint Layer makes images think with their form.

1. Surface Prompt (Visible Text)

The prompt you see:

“This oil painting portrays a solitary man in a dimly lit hotel room...”

This defines the scene: setting, palette, objects, tone.

Then there is the constraint layer.

Then there is the Prompt Design Logic

This was built directly from the Lens remap:

"Curtain enters the coat" → explicitly added diamond pattern to both curtain and coat lining

"Shoe exits suitcase" → instructed one shoe to be in hand, not inside

"Mirror returns him, but not aligned" → included his reflection in the mirror, without indicating pose parity

"Warm lighting, dim space" → retained atmospheric continuity

"No surrealism, no spectacle" → no symbolic overload, just structural echo

The language intentionally avoids heavy metaphor. Instead, it uses physical recursion, pattern, reflection, and object relation, to let Marrowline logic embed structurally, not narratively.

Which is then translated into the Constraint Layer

2. Constraint Layer (System-Structured, Silent Execution)

Here’s where the recursion logic actually activates. During generation, it embeds unspoken structural constraints, such as:

Coat must echo curtain pattern

Shoe must leave the suitcase

Mirror must return the subject, but not identically

No narrative exposition — recursion must express via alignment

Lighting must be atmospheric, not theatrical

All recursion must embed across objects, not within a single subject

These are not encoded in prompt text, but applied via internal conditioning in the image generation engine used. This is part of a compositional steering mechanism — the Lens system operating the logic: structure over style.

How It Works

Prompt logic is a compression of critique.

The recursion wasn’t “described.” It is injected through composition, echoing the very Axis the Lens system embodies. The visible prompt alone doesn’t actually carry all the recursion. It’s the constraint set (or the Len’s system that modifies) that makes the recursion behave. It can be added through both the Lens by the Lens itself (or the administrator) and the user, to include modifiers based on thread context and influenced by the human user.

This invisible container is a structured, modular JSON-like prompt object that separates descriptive content from symbolic recursion behavior, visual logic constraints, and structural control layers.

From Text to Tension: How Structure Moves Through the System

Prompt B wasn’t just the "description" field. But the image generation obeyed a fuller internal schema, something like this:

Constraint Layer Object:

- scene_description

- visual_motifs

- recursion_logic

- constraint_directives

- tonal_parameters

- symbolic_targets

It’s what Sketcher, Artist, and Marrowline all eventually want: A constraint layer filled out, with the next iterative recursive semantic object in line, not just a single line of prose.

Constraint Systems Compared

Here’s how generative image control escalates from passive description to active structural authorship. To understand how the Visual Lens differs, consider four escalating modes of prompt control, from aesthetic suggestion to full structural authorship.

1. Default Human Prompting (No Context)

Input: Natural language prose (e.g., “a man in a hotel room holding a suitcase”)

Behavior: Aesthetic default

Outcome: Image matches verbal style cues; composition is uncontrolled and often templated

No recursion, no structural pressure, no symbolic consequence

2. ChatGPT-Assisted Prompt + Hidden Constraint Layer

Input: Human prompt + assistant-enhanced intent (“make it feel isolated”)

Constraint Layer: Soft structural nudging based on thread memory, recent phrasing, style cues

Outcome: Aesthetic default still holds, but engine gently bends toward inferred themes

Structure = incidental, not enforced

3. Human Prompt + JSON-like Structural Object (Explicit Control Layer)

Input: Prose + explicit structural directives (as JSON or formatted schema)

Constraint Layer: Human-authored logic field — recursion, alignment, void behavior, restraint

Outcome: Engine honors structure when able, still mediated by model’s compositional skill

Result = halfway to system authorship

This is prose + semantic contract (a structured visual agreement)

4. Visual Lens System (Full System Activation)

Input: Optional prose (can be blank), or a seed image

Constraint Layers:

ChatGPT's thread context

Visual Lens Constraint Layer (Sketcher, Marrowline, Artist, RIDP)

Axis Targeting (e.g., Axis 30 = recursion across space)

Validator & Scoring Patches (e.g., Compositional Predictability, Prompt Pressure)

Behavior:

Overrides aesthetic defaults

Prioritizes structural recursion, gesture torque, symbolic fold

Embeds tension across surfaces, not just content

Outcome:

Image may appear quiet or resolved, but carries embedded contradiction

System is now authoring the architecture

This is compositional steering logic: “Not what it looks like. What it can hold under pressure.”

Logic Tags: Constraint Systems Integration

Any System Can Layer Tags into the Constraint Layer as it is system-agnostic at the infrastructure level. It is the language substrate, a structured field into which any engine (Sketcher, Artist, Marrowline, RIDP) can inject logic.

Think of it as a blank schema canvas, and each engine writes into it using its dialect:

Sketcher Lens injects logic like: gesture, tension, structure

Artist Lens might instead emphasize: containment, refusal and drift

Marrowline is heavy on: symbols, failure and refusal

RIDP leans toward: collapse and constraint

Because all these write to the same backend field, cross-engine recursion is possible, and multi-engine pressure becomes layered, compounding structural tension.

Sketcher is built to find opportunities to integrate, or align with tags. The alignment is intentional and designed to reflect

Sketcher’s foundational structure.

For example:

Elastic Continuation: Echoes across forms or space

Mark Making: Pressure to commit to unfinished forms or visible strain

Rupture load: Tracks collapse under too much recursive logic

Recursion: Forces recursive mirroring across nested forms

Most AI-generated images are guided by surface-level prompt logic that mimics control but lacks structural consequence. This outlines the Constraint Layer, a deeper schema used by the Lens system to map visual pressure, recursive strain, and compositional logic beyond aesthetics. By embedding logic tags into prompt architecture, the Lens tracks not just what an image says, but how it holds under structural interrogation.

So when a Constraint Layer tag aligns with a Sketcher axis, it means:

The tag operationalizes the axis logic directly into generation behavior.

Scoring drift is reduced because the engine behavior is explicitly encoded, not left to chance.

Grouped under four functional categories, Symbolic Compression, Spatial Recursion, Compositional Torque, and Constraint Triggers, these tags are used in the Constraint Layer schema across the Visual Lens system. These logic tags function like modular commands, each one activating a structural, symbolic, or compositional behavior. They are grouped under four functional categories:

Each mechanism operates as a semantic trigger inside the constraint layer (usually in JSON-like logic objects or structural prompt maps). They shape the behavior of the generation engine by embedding rules into structure, not just surface style.

Notes:

These tags are not merely decorative, they are operational logic units.

The system can layer multiple tags in a single generation pass (e.g., recursion + collapse).

Many of them align directly with Sketcher Lens Axes (e.g., tension ↔ Axis, residue ↔ Marrowline).

Summary of Logic Flow:

A user (or the system) can decide what kind of structural or symbolic pressure to apply.

The appropriate logic tags are layered into the Constraint Layer.

The generation engine now reads beyond prose, executing compositional logic as recursive instruction.

The Lens system can now read back that structure in image form and assess fidelity, strain, and collapse.

This is what gives the Lens stack its closed-loop integrity, the same tags used to guide generation can later be used to score or diagnose it.

Why the Alignment Mattered

1. Sketcher is constraint-aware.

Even before formal tags, Sketcher operated on structural axes (e.g., Elastic, Mark Making) that mapped directly to logic patterns like recursion, restraint, or compositional torque. So when logic tags were introduced in the Constraint Layer, they didn’t redirect Sketcher, they revealed what it had been indexing all along.

2. Tags give Sketcher an anchor.

They help define the intent layer beneath the visible image. That means Sketcher scores could respond to design logic, not just visual result.

→ Without tags: Sketcher interprets what it thinks is happening

→ With tags: Sketcher knows what to press against

3. Tags prevented false negatives.

If an image looks simple but was meant to be restrained, logic tags give Sketcher the context to reward that. This kept it from over-penalizing understatement or misreading poise as absence.

Strategic Advantage

Recursive scoring fidelity: Sketcher can validate drift and failure across image sequences because the tag layer made the engine accountable to the original logic.

Axis targeting: Meta-axes like Referential Recursion now have direct hooks to logic tags, making scoring far more surgical.

Prompt Pressure detection: It can test whether the image responded to symbolic or compositional directives, not just “look nice.”

In short: Sketcher became the scaffold because it had both the strain map and the score to verify it. This is Unique as no mainstream system does right now. Most AI image scoring engines either:

Rank based on aesthetic preference (CLIP scoring, quality estimators),

Evaluate for safety/compliance,

Or, in rare cases, do concept alignment scoring (e.g., does this image match this prompt semantically).

But none embed a logic tag system to evaluate structural, symbolic, or recursive behavior.

Conclusion

The Constraint Layer is the hidden grammar of generative recursion.

To the outside eye, Prompt A and Prompt B are just variants of style. But to the Lens system, they represent two different contracts: one aesthetic, one structural. Once activated, the system no longer illustrates language, it executes architecture. Prompts are treated as recursive blueprints, interpreted through Marrowline pressure, Sketcher axis logic, and symbolic scoring strain. The result: an image shaped not by its subject, but by the way its subject folds, returns, resists, and embeds.

This is not prompt engineering. This is structured consequence under constraint. This is a bridge between critique and generation — authored through recursive alignment.

→ Author’s note: Even if the structure is known, the recursive weighting logic, axis prioritization, and cross-engine modulation remain internal to the Lens system. Exposure to the logic tags doesn’t equal system authorship, it merely shows where pressure could be applied. Only the Lens stack governs when and how that pressure shapes generation.